The Arc/k Project: Technology

The Arc/k Project is an early adopter of, and innovator in, technology for digitally preserving cultural heritage in 3D and Virtual Reality. Arc/k aims to move the field forward with approaches that facilitate access, expand resources, and/or increase archiving productivity. 3D digital archives can be used in both virtual and augmented reality in educational, academic, and general settings.

While Arc/k remains open to any type of technology that can be used to digitally archive culture, we believe that photogrammetry, a unique fusion of mathematics, photography, and computer graphics, lends itself particularly well to the citizen scientist model that Arc/k has been promoting. With photogrammetry, Arc/k is able to make highly accurate 3D digital archives, creating an authentic snapshot in time as well as a baseline for future renovation, repair, and preservation. By synthesizing digital imagery into virtual reality, Arc/k enables cultural heritage sites to continue supporting research and to become place-based learning environments.

Arc/k actively promotes, teaches and shares photogrammetry to empower citizen scientists, volunteers, cultural heritage organizations and indigenous communities to document and archive their own cultural heritage in new and powerful ways, while adhering to ethical practices.

By training people to the highest possible standards while accounting for their unique on-the-ground challenges, Arc/k believes it can not only make the archives as data-rich as possible but also dramatically increase the chances that these datasets will be used for future scholarly study and for institutional sharing and archiving.

Arc/k has been exploring the artistic and cultural possibilities of photogrammetry, LiDAR, 3D, and VR experiences to help empower people to tell their own stories and to express their culture at scale.

Arc/k has worked with partners to document cultural heritage around the world, including sites in Syria and Venezuela, UNESCO World Heritage sites, and museum collections. Explore our Archival Collections.

Services and Techniques:

- Photogrammetry

- Post processing techniques to derive 3D models

- Crowdsourced photogrammetry

- Drone

- LiDAR

- Photogrammetry and LiDAR combination

- Virtual Reality

- Social Virtual Reality

- Augmented Reality

Learn more about our archival practices and policies at the Arc/kivist’s Corner

What This Technology Can Accomplish

- Combating illicit trade of antiquities and looted artifacts

- Repatriation of Native American artifacts

- Saving physical heritage by creating a revenue source from digital heritage assets

- Teaching organizations and individuals techniques to digitally preserve heritage

- A sustainable, democratized approach means more heritage is saved and preserved

- VR initiatives bringing scholars together around the world

- Social Virtual Reality experiences could lead to new breakthroughs

Photogrammetry

Definition:

Photogrammetry is the process of shooting and analyzing hundreds of photographs of a given subject and processing the images into an extremely accurate, textured 3D digital model. The process is a beautiful fusion of mathematics, photography, and computer graphics. Arc/k has trained in photogrammetry techniques for historical and cultural objects and continues to refine them for accuracy and quality. The process can involve still or video cameras and multiple computers. Equipment from high end to low end is applicable, because photogrammetry is essentially an additive process: the 3D models it generates can be updated as better-quality photos are found or taken. Arc/k currently uses mainly high-end equipment, such as the Canon 5DS R for still captures and the RED Weapon 8K digital video camera (with Helium sensor) for videogrammetry capture. Captures usually take place on location, though smaller objects can be shot in the studio. For controlled lighting, Arc/k uses constant lighting and/or strobe equipment. For inaccessible areas or large sites, drones and drone operators can also be employed.

To capture a site effectively with photogrammetry, Arc/k uses the following tools: a DSLR camera, a Disto laser distance meter, tracking markers, a shutter remote, scale bars of different sizes, a color checker, and lighting equipment. With a route plan prepared during an initial assessment, Arc/k sets up lighting (if necessary) and sets the camera and lenses to appropriate values: ISO as low as possible (100-500 is excellent; go higher only as needed); an aperture of at least f/8 (a higher f-number is better); and a slow shutter speed, but not so slow that motion blur occurs. Arc/k places a color checker and scale bars in the scene close to the subject and photographs them as part of the photogrammetry solve, because matching color and scale measurements are important parts of the solve. Arc/k shoots many photos from many angles and heights, shooting full rotations as low to the ground and as high up as possible, with the help of a monopod. The goal is for consecutive images to overlap by 65-75% so that the software can recognize the same object in both shots, seen from a different angle and position.
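
The overlap target above can be turned into a rough spacing rule. The sketch below is a minimal illustration, not Arc/k's procedure: it assumes an ideal pinhole camera facing a roughly flat surface, and the function names (`footprint_width`, `camera_step`, `scale_factor`) are hypothetical. It estimates how far to move the camera between shots for a given overlap, and how a scale bar of known length yields a factor for converting model units to metres.

```python
def footprint_width(distance_m: float, sensor_width_mm: float, focal_length_mm: float) -> float:
    """Width (in metres) of the area one photo covers on a flat surface facing the camera.

    Assumes an ideal pinhole camera; lens distortion and surface relief are ignored.
    """
    return distance_m * sensor_width_mm / focal_length_mm


def camera_step(distance_m: float, sensor_width_mm: float,
                focal_length_mm: float, overlap: float) -> float:
    """Sideways distance to move between shots so consecutive frames share `overlap` (0..1)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in the range [0, 1)")
    return footprint_width(distance_m, sensor_width_mm, focal_length_mm) * (1.0 - overlap)


def scale_factor(known_length_m: float, measured_length_units: float) -> float:
    """Metres per model unit, from a scale bar whose true length is known."""
    if measured_length_units <= 0:
        raise ValueError("measured length must be positive")
    return known_length_m / measured_length_units


if __name__ == "__main__":
    # Hypothetical example: 36 mm wide full-frame sensor, 50 mm lens, 2 m from the wall.
    for overlap in (0.65, 0.75):
        step = camera_step(2.0, 36.0, 50.0, overlap)
        print(f"{overlap:.0%} overlap -> move about {step:.2f} m between shots")
```

With those assumed numbers a single frame covers about 1.44 m of wall, so 65-75% overlap means moving roughly 0.36 to 0.50 m between shots; moving closer or using a longer lens shrinks the step proportionally.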

Learn how to shoot and capture heritage assets in our Photogrammetry Tutorial

Post Processing Techniques

Once shooting is complete, Arc/k ingests all footage at its in-house studio, where the photos are processed on workstations. Because of the size and nature of these still and video files (often terabytes of data), Arc/k’s networked workstations require large amounts of memory and powerful graphics cards. Various software tools are used to make selects and extract the data properly before processing begins. Arc/k shoots all images in raw formats (such as .dng files), which are then converted to TIFF or JPEG files. When third-party images are used, the raw images are shared with Arc/k and copied to a project folder on the server.
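
The ingest step described above (copying raw files into a project folder on the server) can be scripted. The following is a hypothetical sketch using only the Python standard library; the folder layout, the list of raw extensions, and the SHA-256 checksum manifest are assumptions added for illustration (a checksum manifest is one common way to verify archived copies later), not a description of Arc/k's actual tooling.

```python
import hashlib
import shutil
from pathlib import Path

# Assumption: raw formats to ingest (.dng is named in the text; the others are common camera raws).
RAW_EXTENSIONS = {".dng", ".cr2", ".cr3", ".nef", ".arw"}


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so multi-gigabyte raws never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def ingest_raw_files(source_dir: Path, project_dir: Path) -> dict[str, str]:
    """Copy raw images into <project_dir>/raw, keeping subfolders, and write a checksum manifest."""
    raw_root = project_dir / "raw"
    manifest: dict[str, str] = {}
    for src in sorted(source_dir.rglob("*")):
        if not (src.is_file() and src.suffix.lower() in RAW_EXTENSIONS):
            continue  # skip sidecar files, notes, and non-raw formats
        relative = src.relative_to(source_dir)
        dest = raw_root / relative
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)  # copy2 also tries to preserve timestamps
        manifest[relative.as_posix()] = sha256_of(dest)
    # Same "<hash>  <path>" line format as `sha256sum`, so the copies can be re-verified later.
    lines = [f"{digest}  raw/{name}\n" for name, digest in manifest.items()]
    project_dir.mkdir(parents=True, exist_ok=True)
    (project_dir / "manifest.sha256").write_text("".join(lines), encoding="utf-8")
    return manifest
```

Keeping each file's path relative to the source card avoids collisions when two cameras produce identically named files.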

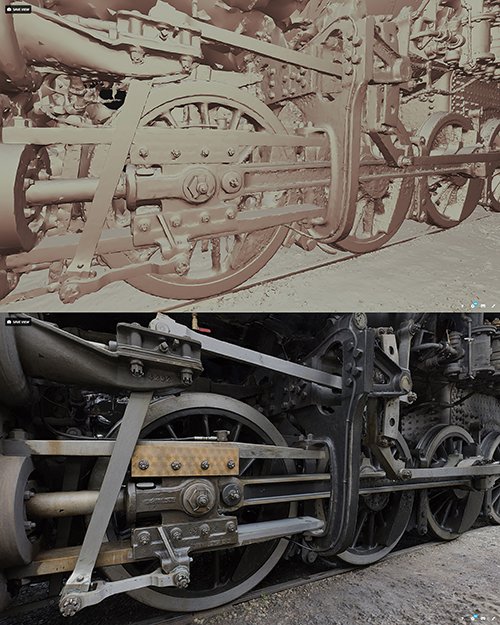

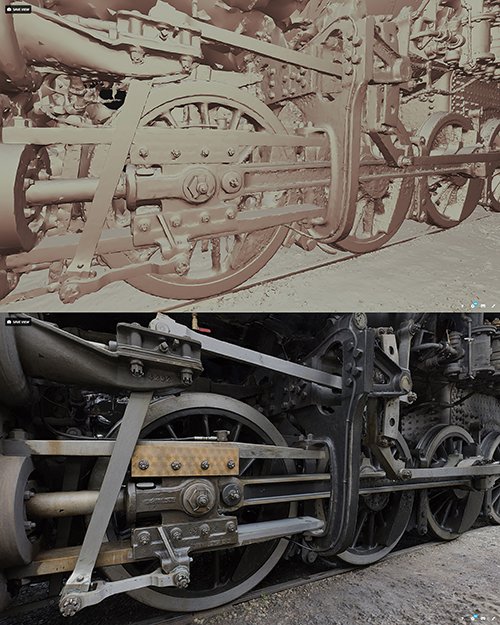

Arc/k begins processing by importing the selected images into RealityCapture. The data goes through several phases, including image alignment, reconstruction, simplification, cleanup, UV unwrapping, and texturing, to generate a 3D model. A single model can be built from as few as 300 or as many as 30,000 images, depending on the object being captured and the photographer’s level of overlap. Once the initial alignment phase is finished, Arc/k has the positions of all the images and generates a colored point cloud that gives a rough outline of the object or environment. Next, Arc/k defines a rectangular 3D bounding volume around the part of the point cloud to be solved, then runs the next phase, which converts the point cloud into a high-resolution 3D mesh surface. The initial mesh is frequently too large to load into other programs, so Arc/k performs an intelligent decimation, using algorithms that remove topology where detail is low while preserving it where detail is high. After simplifying the geometry, Arc/k cleans up any stray fragments that are not part of the model by exporting it to a common 3D format and using a tool such as ZBrush. Arc/k then unwraps the mesh to create UVs, defining how many texture maps to generate and at what resolution. Once the UV map is defined, the texturing phase begins, projecting all the aligned source images onto the mesh to create a photorealistic 3D model. Arc/k typically uses several 8K texture maps, which can be loaded into Sketchfab, and exports the completed model in OBJ or FBX format.
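
Two of the steps above lend themselves to a quick illustration. The sketch below is hypothetical and independent of RealityCapture: `crop_to_box` keeps the points inside an axis-aligned bounding box (a simplification, since a reconstruction region can also be rotated), and `texture_memory_bytes` estimates the uncompressed memory needed for a set of 8K texture maps, assuming 8192 × 8192 texels, 8-bit RGBA, and no mipmaps.

```python
from typing import Iterable

Point = tuple[float, float, float]


def crop_to_box(points: Iterable[Point], box_min: Point, box_max: Point) -> list[Point]:
    """Keep only the points that fall inside an axis-aligned box (bounds inclusive)."""
    return [
        p for p in points
        if all(lo <= coord <= hi for coord, lo, hi in zip(p, box_min, box_max))
    ]


def texture_memory_bytes(num_maps: int, resolution: int = 8192, bytes_per_texel: int = 4) -> int:
    """Uncompressed size of `num_maps` square textures (default: 8K, 8-bit RGBA, no mipmaps)."""
    return num_maps * resolution * resolution * bytes_per_texel


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (0.5, 0.5, 0.5), (3.0, 0.0, 0.0)]
    print(crop_to_box(cloud, (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)))
    for maps in (1, 4, 8):
        print(f"{maps} x 8K maps ~ {texture_memory_bytes(maps) / 2**30:.2f} GiB uncompressed")
```

Under these assumptions one 8K RGBA map is 8192 × 8192 × 4 bytes = 256 MiB before compression, so four maps already need 1 GiB, which helps explain why texture count and resolution are chosen deliberately.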

Once Arc/k has generated a model, it either publishes the model online or delivers it to the partner directly as OBJ or FBX files. For XR applications, Arc/k builds self-contained executable (.exe) files that launch the experience with a click. Much of Arc/k’s work is published on Sketchfab for public display and to raise awareness of the history and cultural importance of the artifacts and locations modeled. Arc/k usually uploads the model to Sketchfab for previewing, either as a private object visible only to Arc/k (or to anyone with the link) or as a public object. If the model is an environment suitable for VR, Arc/k creates a new VR project (often from a simple VR navigation template), imports the model, and ensures it has the proper shaders and attributes, basic lighting, and correct scaling.

Image and scan data are then always archived for future reference. The original data is often shared online, or can be shared upon specific request.

Crowdsourced Photogrammetry

Definition:

Crowdsourced photogrammetry is a technique of analyzing thousands of photos to digitally reconstruct lost, damaged, or destroyed sites in 3D and in virtual reality using photogrammetry software and technical expertise.

See Examples of Our Crowdsourced Photogrammetry

Cultural Heritage of Syria: 3D models created by Arc/k using crowdsourced photogrammetry.

Shah Mosque in Isfahan, Iran: 3D models created by Arc/k using crowdsourced photogrammetry.

Drone

Definition:

A drone, in technological terms, is an unmanned aircraft. Drones are more formally known as unmanned aerial vehicles (UAVs) or unmanned aircraft systems (UASes). Essentially, a drone is a flying robot that can be remotely controlled or can fly autonomously through software-controlled flight plans in its embedded systems, working in conjunction with onboard sensors and GPS. For inaccessible areas or large heritage sites, drones and drone operators can also be employed.
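
A software-controlled flight plan for mapping a large site is often a back-and-forth ("lawnmower") grid. The sketch below is a hypothetical illustration in a local metre-based frame rather than GPS coordinates, and it is not tied to any particular drone or flight-planning app. It assumes a downward-facing camera over flat ground and spaces the flight lines from the camera's ground footprint and a chosen side overlap; `line_spacing` and `lawnmower_waypoints` are illustrative names.

```python
import math


def line_spacing(altitude_m: float, sensor_width_mm: float,
                 focal_length_mm: float, side_overlap: float) -> float:
    """Distance between adjacent flight lines for a nadir camera over flat ground."""
    footprint = altitude_m * sensor_width_mm / focal_length_mm  # pinhole ground coverage
    return footprint * (1.0 - side_overlap)


def lawnmower_waypoints(width_m: float, length_m: float,
                        spacing_m: float, altitude_m: float) -> list[tuple[float, float, float]]:
    """Serpentine waypoints (x east, y north, z altitude) covering a width x length rectangle."""
    if spacing_m <= 0:
        raise ValueError("spacing must be positive")
    n_lines = math.ceil(width_m / spacing_m) + 1  # one extra line so the far edge is covered
    waypoints = []
    for i in range(n_lines):
        x = min(i * spacing_m, width_m)  # clamp the last line to the site boundary
        # Alternate direction on every line so the drone does not fly back to the start.
        y_start, y_end = (0.0, length_m) if i % 2 == 0 else (length_m, 0.0)
        waypoints.append((x, y_start, altitude_m))
        waypoints.append((x, y_end, altitude_m))
    return waypoints
```

For example, at an assumed 50 m altitude with a 36 mm wide sensor and a 24 mm lens, the footprint is 75 m wide and 70% side overlap gives flight lines about 22.5 m apart.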

See example: Palmyra Castle, located in the province of Homs, Syria, is listed as one of the UNESCO World Heritage sites in danger due to the Syrian civil war. Explore the crowdsourced photogrammetry model of the castle, generated from HD video footage shot by a paraglider circling above.
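
Building a model from video, as with the paraglider footage, usually starts by pulling still frames from the clip (tools such as FFmpeg can export individual frames). Consecutive video frames are nearly identical, so only a subset is needed. The hypothetical sketch below picks a frame interval so that each selected frame is a chosen number of degrees further around a circular orbit; it assumes a constant orbit speed, which real footage will only approximate.

```python
def frame_step_for_orbit(fps: float, orbit_period_s: float, angle_step_deg: float) -> int:
    """Frames between selections so the camera advances about angle_step_deg per pick."""
    if fps <= 0 or orbit_period_s <= 0 or angle_step_deg <= 0:
        raise ValueError("all inputs must be positive")
    frames_per_orbit = fps * orbit_period_s
    return max(1, round(frames_per_orbit * angle_step_deg / 360.0))


def select_frames(total_frames: int, step: int) -> list[int]:
    """Indices of the frames to extract, starting from the first frame."""
    return list(range(0, total_frames, step))


if __name__ == "__main__":
    # Hypothetical clip: 30 fps, one full circle every 60 s, 2 minutes long.
    step = frame_step_for_orbit(fps=30, orbit_period_s=60, angle_step_deg=5)
    frames = select_frames(total_frames=30 * 120, step=step)
    print(step, len(frames))
```

With these assumed numbers the script keeps every 25th frame: 72 frames per orbit, or 144 frames over the two-minute clip.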

LiDAR

Definition:

LiDAR stands for “Light Detection and Ranging.” It is a surveying method that measures the distance to a target by illuminating it with pulsed laser light and measuring the reflected pulses with a sensor. Differences in laser return times and wavelengths are then used to build 3D representations of the target.
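
For a pulsed scanner, the range to a point follows from the round-trip travel time of the pulse: the light goes out and back, so the distance is the speed of light multiplied by half the travel time. Combined with the beam's horizontal and vertical angles, each return becomes a 3D point in the scanner's own coordinate system. The sketch below illustrates this with hypothetical function names; it uses the vacuum speed of light and ignores the atmospheric corrections and instrument calibration that real scanners apply.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # in vacuum; light in air is slightly slower


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target: the pulse travels there and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Spherical-to-Cartesian conversion in the scanner's local frame (z up)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


if __name__ == "__main__":
    # About 50 ft (15.24 m) away: the round trip takes roughly 0.1 microseconds.
    t = 2 * 15.24 / SPEED_OF_LIGHT_M_PER_S
    print(f"round trip: {t * 1e9:.1f} ns, range: {range_from_round_trip(t):.2f} m")
```

The timescales are tiny: a one-nanosecond timing error shifts the computed range by about 15 cm, so scanners depend on very precise timing electronics.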

Arc/k sets up a LiDAR scanner at the recommended scanning distance of about 50 feet from the target capture area and monitors the project and scans. Each scan should overlap the previous one by about 50% to ensure accuracy when the scans are stitched together. After the scanning sessions are complete, the team checks the results for balance, overlap, and alignment.

To process the laser scans, Arc/k imports the data onto a desktop computer and saves it in the .E57 format (a general-purpose point cloud format). Arc/k then brings the data into ReCap Pro and ReCap Pro 360. Historic England’s Advice and Guidance on the Use of Laser Scanning in Archaeology and Architecture outlines the laser scan data process:

“In order to turn scan data into a useful product, the scans must first be registered, generally through the use of additional survey control measurements. Cleaning and filtering the data involves removing extraneous scan data from unwanted features, such as adjacent buildings, people, vegetation, obstructions, data through windows, etc. This exercise reduces the size of the dataset and should make registration more efficient.

“At the time of collection each scan has an arbitrary coordinate system, so the location has to be shifted and the axis orientated into the common coordinate system. This process is known as point cloud alignment or registration. If the common system is to be orientated to a real-world coordinate system then control points will also be required.”

Control points are used when the software cannot detect enough correlation between scans or cameras to find a reasonable match. The user can then manually add control points, which are single points that the user identifies in each solve to help generate a combined solution.
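
Once matching control points have been picked in two scans, the rigid transform that brings one scan into the other's coordinate system can be solved by least squares. The sketch below is a simplified, hypothetical illustration, not the method used by ReCap: it assumes both scans are leveled (many terrestrial scanners compensate for tilt), so only a rotation about the vertical axis plus a 3D translation has to be estimated. A full six-degree-of-freedom solution would use a method such as the Kabsch or Horn algorithm instead.

```python
import math
from typing import Sequence

Vec3 = tuple[float, float, float]


def _centroid(points: Sequence[Vec3]) -> Vec3:
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def register_leveled(src: Sequence[Vec3], dst: Sequence[Vec3]) -> tuple[float, Vec3]:
    """Least-squares yaw (radians) and translation mapping src control points onto dst."""
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matching control point pairs")
    cs, cd = _centroid(src), _centroid(dst)
    dot_sum = cross_sum = 0.0
    for p, q in zip(src, dst):
        # Work with horizontal coordinates relative to each centroid.
        px, py = p[0] - cs[0], p[1] - cs[1]
        qx, qy = q[0] - cd[0], q[1] - cd[1]
        dot_sum += px * qx + py * qy
        cross_sum += px * qy - py * qx
    yaw = math.atan2(cross_sum, dot_sum)  # angle that best lines up the two point sets
    c, s = math.cos(yaw), math.sin(yaw)
    # Translation moves the rotated source centroid onto the destination centroid.
    t = (cd[0] - (c * cs[0] - s * cs[1]),
         cd[1] - (s * cs[0] + c * cs[1]),
         cd[2] - cs[2])
    return yaw, t


def apply_transform(p: Vec3, yaw: float, t: Vec3) -> Vec3:
    """Rotate a point about the vertical (z) axis, then translate it."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1], p[2] + t[2])
```

With real, slightly noisy picks, the remaining distance between `apply_transform(p, yaw, t)` and each destination point gives a quick check on registration quality.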

Photogrammetry and LiDAR Combination

Photogrammetry creates 3D models with accurate textures but struggles with architectural spaces that have multiple inner walls and varying depths. Unless every angle is photographed, an accurate depiction of volume and space cannot be achieved through photogrammetry alone. It also requires a highly skilled operator for data processing.

LiDAR is an excellent complement because it also produces a 3D representation, though it cannot capture the texture of materials. Combining these 3D technologies provides an accurate, immersive view of a heritage site.
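
One common way to combine the two is to color LiDAR points from photographs: once a photo's camera pose and intrinsics are known in the scan's coordinate system (for example, from a photogrammetry alignment registered to the scan), each point is projected into the image and takes the color of the pixel it lands on. The sketch below is a minimal, hypothetical version. It assumes the points are already expressed in the camera's frame (z pointing forward, image y pointing down), an ideal pinhole camera with no lens distortion, and no occlusion test; a real pipeline would also need to handle these.

```python
from typing import Optional, Sequence

Point = tuple[float, float, float]
RGB = tuple[int, int, int]


def project(point_cam: Point, fx: float, fy: float,
            cx: float, cy: float) -> Optional[tuple[float, float]]:
    """Pinhole projection to pixel coordinates; None if the point is behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def colorize(points_cam: Sequence[Point], image: Sequence[Sequence[RGB]],
             fx: float, fy: float, cx: float, cy: float) -> list[Optional[RGB]]:
    """Nearest-pixel color for each point, or None if it falls outside the photo."""
    height = len(image)
    width = len(image[0]) if height else 0
    colors: list[Optional[RGB]] = []
    for point in points_cam:
        uv = project(point, fx, fy, cx, cy)
        color = None
        if uv is not None:
            u, v = round(uv[0]), round(uv[1])  # nearest pixel center
            if 0 <= u < width and 0 <= v < height:
                color = image[v][u]
        colors.append(color)
    return colors
```

Points that receive `None` are simply not seen by that photo; in practice several photos are used so that most points get a color from at least one of them.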

Virtual Reality

Definition:

Virtual Reality is an artificial environment that is experienced through sensory stimuli (such as sights and sounds) provided by a computer, and in which one’s actions partially determine what happens in the environment. Instead of viewing a screen in front of them, users are immersed in and able to interact with 3D worlds or virtual artifacts using electronic devices such as a keyboard and mouse, special goggles with a screen or a 3D headset, and/or wired gloves. Sound can also be incorporated into the environment. Some systems include advanced haptics that provide tactile information, generally known as force feedback, in medical and gaming applications. The simulated environment can be similar to the real world, as in simulations for pilot or medical training, or it can differ significantly from reality, as in Virtual Reality games.

See Examples of Our VR Experiences

A selection of Arc/k’s 3D models and VR experiences is displayed, with all relevant metadata, through a content management system on Arc/k’s website and on Sketchfab. Arc/k has also created more robust, immersive Vive-based VR experiences (with body tracking), which have been made available to the public and to institutions for specific exhibitions.

Sketchfab also supports viewing these experiences with a VR headset.

“Portals to the Past” (2018): An Arc/k VR experience featuring the Temple of Bel, the Arch of Triumph, the Roman Theatre, and the Fakhr-al-Din al-Ma’ani Castle in Palmyra, Syria.

The Palmyra VR experience debuted at ‘VR Los Angeles’ and at select conferences. It was presented as a Social VR experience (see below) in the Oklahoma Virtual Academic Laboratory (OVAL) at the University of Oklahoma Libraries, in partnership with Dr. Al-Azm of Shawnee State University.

2141 Steam Locomotive — 3D model created by Arc/k for Kamloops Heritage Railway.

This VR experience travelled to 13 libraries in Canada and received positive engagement from students.

Cultural Heritage & Environments of Iceland — 3D models created by Arc/k

Arc/k also created VR experiences of this unique ecosystem.

Apollo 11 Moon Landing VR Experience — created by Arc/k

This is best experienced in VR on a Vive or Oculus headset.

Social Virtual Reality

Definition:

Social VR (social virtual reality) is a 3-dimensional computer-generated space which must support visitors in VR headsets (and may also support non-VR users). The user is represented by an avatar. The purpose of the platform must be open-ended, and it must support communication between users sharing the same space. In almost all social VR platforms, the user is free to move around the space, and the content of the platform is completely or partially user-generated. — Ryan Schultz

Social VR hosts multiple people simultaneously in the same experience, usually connected by voice chat, with each participant seeing an avatar-like representation of the others. “Avatar” is a very loose description of what we see of the other person. The most common, quintessential avatar is a stylized head (possibly a sphere) with some feature or texture that shows where it is looking. A representation of hands is the second most common element of an avatar and is helpful for conveying gestures and pointing. Sometimes a Social VR platform gives the avatar a toon-like 3D body, but this is less common and more challenging: almost no VR technologies can both track the feet and body and render 15-20 such avatars in one Social VR space.

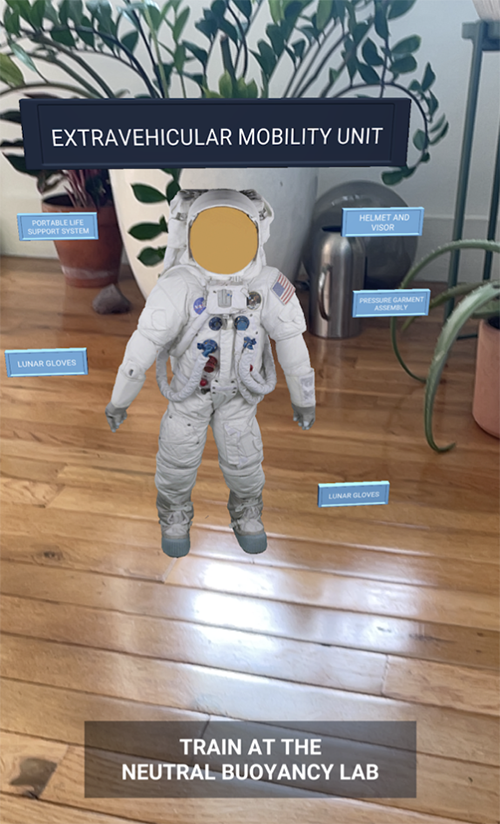

Augmented Reality

Definition:

Augmented reality works by turning a digital viewer, such as a mobile device or a headset, into a window that layers holograms over visible reality, adding extra information or 3D graphics.

As a result, a sidewalk can have a path of virtual lights guiding a person to a destination, advertisements can appear above a shop door offering nightly specials, and the virtual avatars of remote family members can appear to be sitting on the couch even when they are miles away.

The Arc/k Project supports the free educational AR mobile app Reach Across the Stars with our 3D asset of the US Space Suit. The app uses AR to share the stories of female science heroes and aims to reach middle school girls to promote an interest in STEM careers.

We provided our 3D model of the US Space Suit for the profile of American NASA astronaut Jessica Watkins, a geologist, aquanaut and former international rugby player. The project is produced in conjunction with Kimberly Arcand and her team from NASA’s Chandra Observatory, with funding by the Smithsonian’s Because of Her Story Initiative.

The Arc/k Project | NASA’s Chandra Observatory | the Smithsonian Institution
